World's highest-resolution lidars + cameras + radars

Brain power is the new horsepower

Transformer-based learning from human driving

Beyond RoboTaxi

Tensor combines multiple long- and short-range sensing technologies to virtually eliminate blind spots, cross-verify data, and maximize perception accuracy. This diversity ensures reliable performance in low light, glare, bad weather, or even when individual sensors are obstructed.
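One simple way to picture cross-verification is a median vote across independent range estimates of the same object. This is a minimal sketch, not Tensor's method: `SensorReading`, `cross_verify`, and the 1 m tolerance are hypothetical, and a production stack would fuse full detections and tracks rather than single distances.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class SensorReading:
    sensor: str               # illustrative label, e.g. "lidar", "camera", "radar"
    range_m: Optional[float]  # None when the sensor is obstructed or sees nothing


def cross_verify(readings: list[SensorReading], tolerance_m: float = 1.0):
    """Fuse per-sensor range estimates and flag sensors that disagree.

    Returns (fused_range_m, outlier_names). The fused value is the median of
    all usable readings, so with three or more sensors a single faulty reading
    cannot drag it far; any sensor more than tolerance_m from that median is
    reported as an outlier.
    """
    usable = [r for r in readings if r.range_m is not None]
    if not usable:
        return None, []
    fused = median(r.range_m for r in usable)
    outliers = [r.sensor for r in usable if abs(r.range_m - fused) > tolerance_m]
    return fused, outliers


readings = [
    SensorReading("lidar", 25.2),
    SensorReading("radar", 25.6),
    SensorReading("camera", 31.0),  # e.g. degraded by sun glare
]
print(cross_verify(readings))  # (25.6, ['camera'])
```

An obstructed sensor reporting `None` is simply left out of the vote, so the remaining sensors still produce a fused estimate.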

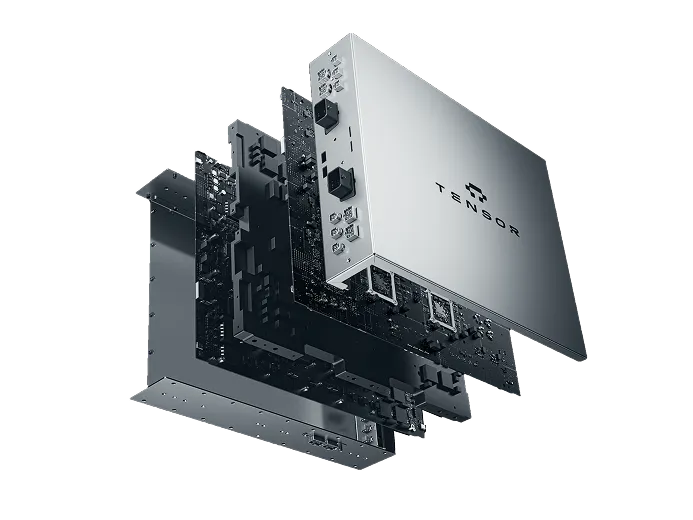

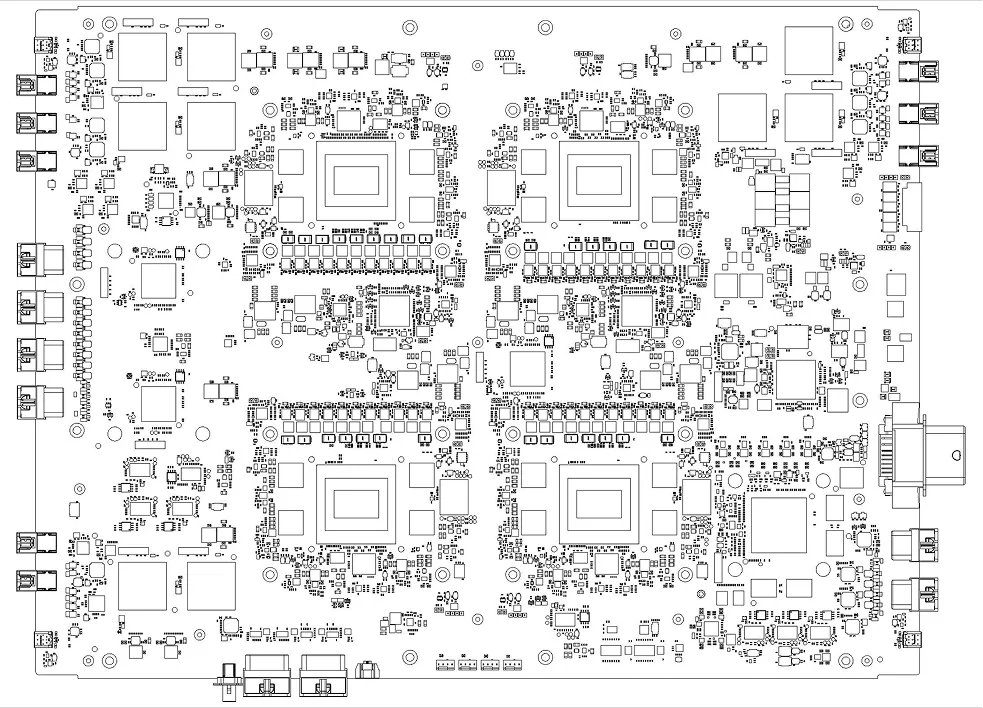

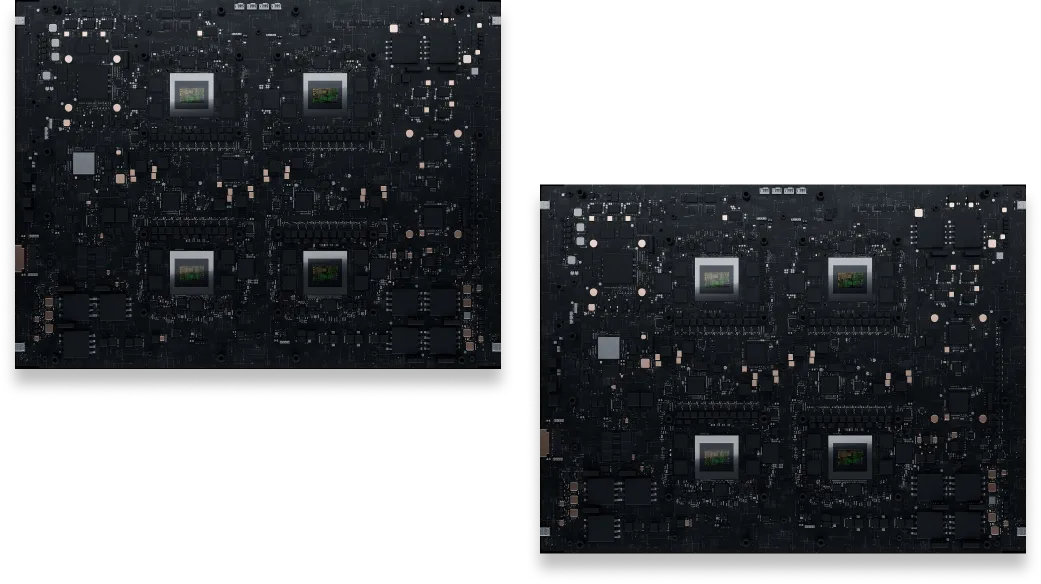

Powered by eight NVIDIA DRIVE AGX Thor SoCs based on the cutting-edge Blackwell GPU architecture, Tensor delivers over 8,000 TOPS of GPU performance — the most of any vehicle — with triple-layer safety redundancy for Level 4 autonomy.

Alongside NVIDIA’s processors, Tensor’s supercomputer integrates advanced chips from Texas Instruments, NXP, and Renesas, adding layers of safety, security, and real-time performance that meet ASIL-D, the most stringent Automotive Safety Integrity Level defined by ISO 26262.

With 10 GPUs, 144 CPU cores, and high-speed processors streaming over 53 gigabits of sensor data per second (~1,000x faster than typical home internet), Tensor delivers the real-time intelligence needed for advanced autonomy.
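The data-rate figure can be put into storage and bandwidth terms with simple arithmetic. This sketch assumes decimal (SI) units and a continuous, uncompressed stream; the "home internet" speed it prints is only 53 Gbit/s divided by the quoted ~1,000x, not a measured figure.

```python
# Figures quoted in the text; SI units and a continuous stream are assumed.
SENSOR_RATE_GBIT_S = 53   # gigabits of sensor data per second
QUOTED_SPEEDUP = 1_000    # "~1,000x faster than typical home internet"

bytes_per_second = SENSOR_RATE_GBIT_S * 1e9 / 8      # 8 bits per byte
terabytes_per_hour = bytes_per_second * 3600 / 1e12  # 3,600 seconds per hour
implied_home_mbit_s = SENSOR_RATE_GBIT_S * 1e3 / QUOTED_SPEEDUP

print(f"{bytes_per_second / 1e9:.3f} GB/s")                  # 6.625 GB/s
print(f"{terabytes_per_hour:.2f} TB per hour")               # 23.85 TB per hour
print(f"{implied_home_mbit_s:.0f} Mbit/s implied baseline")  # 53 Mbit/s implied baseline
```

At that rate, an hour of driving produces roughly 24 TB of raw sensor data, which is why the onboard computer must process most of it in real time.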

The Tensor autonomous driving system is powered by the Tensor Foundation Model, our AI built on state-of-the-art neural network algorithms.

Tensor’s AI is entirely data-driven, learning every aspect of driving—including perception, prediction, and planning—from vast amounts of real-world and simulated data.

Scaling our deep learning approach demands extensive computing infrastructure and vast amounts of high-quality data. Tensor heavily invests in infrastructure for large-scale data collection, visualization, ground-truth generation, and machine learning training and evaluation.

Running on Oracle, one of the world’s largest cloud providers, our extensive data-processing infrastructure enables continuous performance improvement.

Our multimodal AI leverages sensor fusion, integrating data streams from ultra-high-resolution cameras, lidars, radars, and directional audio microphones. This combination significantly improves both precision and reliability, particularly in challenging conditions such as severe weather, sun glare, or nighttime driving.

Tensor’s AI is trained on data collected by expert drivers using a full sensor suite to capture ideal driving behavior. Advanced imitation learning algorithms replicate these optimal actions, enabling the AI to handle complex scenarios with human-like skill and adaptability.
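Behavior cloning, the simplest form of imitation learning, treats expert demonstrations as supervised (state, action) pairs and fits a policy to reproduce the expert's actions. The toy sketch below is not Tensor's algorithm: it fits a two-parameter linear steering policy rather than a neural network, and `expert_steer`, its coefficients, and the two state features are hypothetical stand-ins for recorded expert driving.

```python
from __future__ import annotations

import random


def expert_steer(offset_m: float, heading_rad: float) -> float:
    # Hypothetical expert: steers back toward the lane center.
    return -0.8 * offset_m - 1.5 * heading_rad


def behavior_cloning(demos, lr: float = 0.5, epochs: int = 1000) -> list[float]:
    """Fit steer = w0 * offset + w1 * heading to expert (state, action) pairs
    by minimizing mean squared error with full-batch gradient descent."""
    w = [0.0, 0.0]
    n = len(demos)
    for _ in range(epochs):
        grad = [0.0, 0.0]
        for (offset, heading), action in demos:
            err = w[0] * offset + w[1] * heading - action  # prediction minus expert action
            grad[0] += 2 * err * offset / n
            grad[1] += 2 * err * heading / n
        w = [w[0] - lr * grad[0], w[1] - lr * grad[1]]
    return w


rng = random.Random(0)  # fixed seed so the demo is reproducible
states = [(rng.uniform(-1.0, 1.0), rng.uniform(-0.3, 0.3)) for _ in range(200)]
demos = [(s, expert_steer(*s)) for s in states]

weights = behavior_cloning(demos)
print([round(w, 3) for w in weights])  # [-0.8, -1.5]
```

Because the demonstrations here are noise-free and the policy has the same form as the expert, the learned weights recover the expert's coefficients; real driving data is noisy and far higher-dimensional.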

Tensor’s AI uses advanced Transformer-based neural networks to understand and predict the behavior of drivers, cyclists, and pedestrians in real time. By analyzing how road users interact with each other, it makes intelligent, natural, and safe driving decisions in complex scenarios.
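The core operation inside a Transformer is scaled dot-product attention, which lets one element (here, the ego vehicle) weigh every other road user by relevance. This minimal sketch shows a single attention query in plain Python; the 2-D vectors are hand-picked toy values, whereas a trained model would produce queries, keys, and values from learned projections and use many heads and layers.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query over N road users.

    weights[i] = softmax_i(query . keys[i] / sqrt(d)); the returned context
    vector is the weighted average of the values.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    context = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return weights, context


# Toy vectors chosen so the pedestrian is the most relevant road user.
ego_query = [1.0, 0.0]
agent_keys = [
    [2.0, 0.0],  # pedestrian stepping toward the lane
    [0.0, 1.0],  # parked car
    [1.0, 1.0],  # cyclist in the bike lane
]
agent_values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

weights, context = attend(ego_query, agent_keys, agent_values)
print([round(w, 2) for w in weights])  # [0.58, 0.14, 0.28]
```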

Tensor’s AI combines two complementary systems: fast, instinctive reactions for real-time driving and deep, deliberate reasoning for complex or rare scenarios. Powered by a multimodal Visual Language Model running entirely on its onboard supercomputer, it excels at recognizing and navigating unexpected “corner cases” with human-like judgment.

Tensor’s AI blends High-Definition 3D maps with live camera and lidar data, using maps when reliable and instantly switching to real-time perception when they’re not. This dual approach combines the foresight of pre-mapped roads with the adaptability of on-the-spot recognition, ensuring safe, accurate driving in any situation.
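The text does not say how map reliability is judged. One plausible rule, assumed here purely for illustration, is to trust the map only while live perception confirms it. In this sketch, `LaneEstimate`, `select_lane`, and the 0.5 m threshold are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class LaneEstimate:
    source: str                    # "hd_map" or "perception"
    center_offsets_m: list[float]  # lane-center lateral offsets sampled ahead of the car


def select_lane(map_lane: Optional[LaneEstimate], live_lane: LaneEstimate,
                max_deviation_m: float = 0.5) -> LaneEstimate:
    """Use the HD map only while live perception confirms it.

    Falls back to perception when the map has no data for this stretch or
    when any sampled point disagrees by more than max_deviation_m (for
    example, lanes shifted by construction since the map was made).
    """
    if map_lane is None or not map_lane.center_offsets_m:
        return live_lane
    # With no perceived points to compare against, there is no evidence
    # against the map, so deviation defaults to 0 and the map is kept.
    deviation = max((abs(m - p) for m, p in
                     zip(map_lane.center_offsets_m, live_lane.center_offsets_m)),
                    default=0.0)
    return map_lane if deviation <= max_deviation_m else live_lane


live = LaneEstimate("perception", [0.0, 0.1, 1.2])
print(select_lane(LaneEstimate("hd_map", [0.0, 0.1, 0.2]), live).source)  # perception
```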

Validated through real-world testing, massive datasets, and high-fidelity simulations, Tensor’s Foundation Model delivers state-of-the-art perception, prediction, and planning. Its proven performance ensures safe navigation even in the most complex, dynamic environments.

Tensor’s software features primary, secondary, and emergency layers to ensure safe operation in any scenario. If issues arise, control shifts to backup systems or an emergency stop, with a Minimal Risk Maneuver guiding the vehicle to the safest possible state.
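The primary, secondary, and emergency layers can be pictured as a small state machine. This is a minimal sketch under stated assumptions, not Tensor's software: the health flags are hypothetical inputs, and the choice to latch (never promote control back up during a drive) is an illustrative design decision.

```python
from enum import Enum


class Layer(Enum):
    PRIMARY = 1
    SECONDARY = 2
    EMERGENCY = 3  # performs a Minimal Risk Maneuver toward a safe stop


class FallbackSupervisor:
    """Latching degradation: control only moves down the layers during a drive,
    so an intermittent fault cannot bounce control back and forth."""

    def __init__(self) -> None:
        self.layer = Layer.PRIMARY

    def update(self, primary_ok: bool, secondary_ok: bool) -> Layer:
        if self.layer is Layer.PRIMARY and not primary_ok:
            self.layer = Layer.SECONDARY
        # Checked on the same tick, so a double fault goes straight to EMERGENCY.
        # A secondary fault while PRIMARY is in control leaves PRIMARY active here.
        if self.layer is Layer.SECONDARY and not secondary_ok:
            self.layer = Layer.EMERGENCY
        return self.layer


sup = FallbackSupervisor()
for primary_ok, secondary_ok in [(True, True), (False, True), (True, True), (True, False)]:
    print(sup.update(primary_ok, secondary_ok).name)
# PRIMARY, SECONDARY, SECONDARY (latched), EMERGENCY
```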

Tensor’s independent Automatic Emergency Braking system uses near-range lidar to detect imminent collisions and intervene at the last moment. This approach greatly improves reliability in challenging conditions like nighttime or glare, providing dependable protection when it’s needed most.
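A common way to decide that a collision is imminent is time-to-collision (TTC): the current gap divided by the closing speed. This sketch assumes constant velocity between two consecutive lidar range measurements; the function names and the 1.5 s threshold are hypothetical, and the source does not state which criterion Tensor's system uses.

```python
import math


def time_to_collision(range_m: float, prev_range_m: float, dt_s: float) -> float:
    """Constant-velocity TTC from two consecutive lidar range measurements."""
    closing_speed = (prev_range_m - range_m) / dt_s  # m/s, positive when the gap shrinks
    if closing_speed <= 0:
        return math.inf  # holding distance or pulling away
    return range_m / closing_speed


def should_brake(range_m: float, prev_range_m: float, dt_s: float,
                 ttc_threshold_s: float = 1.5) -> bool:
    # Intervene only when impact is imminent; 1.5 s is an illustrative threshold.
    return time_to_collision(range_m, prev_range_m, dt_s) < ttc_threshold_s


print(should_brake(9.0, 10.0, 0.1))   # True: closing at 10 m/s, TTC 0.9 s
print(should_brake(30.0, 30.5, 0.1))  # False: closing at 5 m/s, TTC 6 s
```

Waiting for a short TTC keeps false activations rare, which matches the "intervene at the last moment" design described above.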

Tensor’s collision detection system combines lidar, Inertial Measurement Units, and 16 mechanical-wave-based sensors to identify incidents with precision. By integrating this data, the AI helps prevent secondary injuries, enhancing safety for both occupants and other road users.
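The text does not describe how these signals are combined. One plausible pattern, assumed here for illustration, is to confirm an impact only when two independent signals agree, so that a pothole jolting the IMU alone is not reported as a collision. This sketch covers only the IMU and the mechanical-wave sensors (lidar is omitted), and `detect_collision`, the 4 g threshold, and the two-sensor minimum are hypothetical.

```python
from __future__ import annotations


def detect_collision(peak_accel_g: float, wave_hits: list[bool],
                     accel_threshold_g: float = 4.0, min_hits: int = 2):
    """Confirm an impact only when IMU and wave sensors agree.

    peak_accel_g: peak acceleration magnitude from the IMU, in g.
    wave_hits: one flag per mechanical-wave sensor (16 in the text),
               True if that sensor registered an impact-like wave.
    Returns (collision_detected, indices of triggered sensors); the indices
    give a rough impact location on the body.
    """
    triggered = [i for i, hit in enumerate(wave_hits) if hit]
    detected = peak_accel_g >= accel_threshold_g and len(triggered) >= min_hits
    return detected, triggered


hits = [False] * 16
hits[3] = hits[4] = True
print(detect_collision(6.0, hits))         # (True, [3, 4])
print(detect_collision(6.0, [False] * 16))  # (False, []): jolt with no impact wave
```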

When most people hear the term "self-driving car," they envision a personal vehicle capable of full autonomy. However, today’s Level 4 autonomous vehicles are mostly RoboTaxis: vehicles that frequently return to a centralized depot for onerous maintenance, sensor cleaning, software updates, and hardware checks and replacements. In other words, today's RoboTaxi fleets rarely leave the careful supervision of their operators, making them fundamentally different from the personal Robocars consumers truly desire.

Unlike RoboTaxis built solely for full autonomy, Tensor’s personal Robocar is the world’s first to provide all levels of autonomy with seamless flexibility:

• Level 0 manual driving

• Level 2 assisted driving

• Level 3 conditional self-driving

• Level 4 complete autonomous driving

Tensor’s dedicated hardware, software, and intuitive user interface are designed from the ground up for these personal use cases, natively supporting Level 4 autonomy while effortlessly accommodating Level 0, Level 2, and Level 3 driving modes. This means you get a unified, easy-to-use experience across all driving scenarios, without confusion or complexity, whether you want to drive yourself, get a little help, or let your car handle the entire journey.